The First Amendment. Article 19. Freedom of Expression. The ability to say what you think without fear or unlawful interference is known by many names. It’s perhaps one of the most valued rights across democratic societies, but many of us take it for granted. Is it possible that AI is threatening our freedom of expression?

No right is absolute. Like all rights, the right to freedom of expression is limited to protect others from abuse, ensure national security and limit obscenities (among other things). Censoring has always occurred and takes many forms.

Emma J Llansó differentiates between manual and automated censoring. In manual censoring, individuals on the internet flag content which they think should be censored, and human moderators then view the content and determine whether this is the case. The process is often slow and inconsistently applied, and it can expose moderators to trauma because they experience the worst of the internet. Furthermore, manual systems are vulnerable to being overrun, such as in the immediate aftermath of the Christchurch shooting in 2019, when copies of the shooter’s video were uploaded with extreme frequency across YouTube.

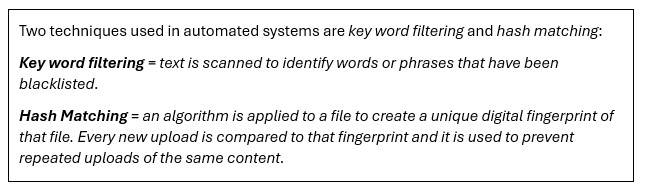

It is these flaws in the manual system which push individuals like Mark Zuckerberg to promote the use of automated systems. AI provides governments and tech companies with a cheap and quick way to censor platforms, avoiding many of the problems posed by manual systems. Facebook and other social media platforms have already widely implemented automated systems which use AI. These are known as ‘type 2 content moderation systems’ and can pre-emptively detect not just existing categories of problematic content (e.g. nudity, child pornography etc.) but also more subjective categories like hate speech, incitement and harmful disinformation.

The problem with these automated censoring techniques is that they bear a strong resemblance to the legal concept of prior restraint. This means that speech or content is blocked before it has even been published. Liberal and democratic societies have tended to ban prior restraint as it creates a serious interference with individuals’ right to freedom of expression. For example, requiring newspapers to undergo checks before publishing stories would be unthinkable in most liberal societies. If a newspaper published an article which broke any laws, it would instead be prosecuted after publication.

Despite these legal presumptions against prior restraint, governments are encouraging rather than restraining its use in AI-based filtering systems. There are plans across the US and Europe to encourage the use of “upload filtering” (tools to automatically block content from being uploaded) across social media platforms. In fact, the social media platform ‘Parler’ was recently removed from Apple’s App Store for its failure to moderate content, and the condition for re-entry was to adopt AI-based content moderation tools.

It’s understandable that governments would look to automated censoring systems, as manual systems reliant on humans can’t possibly work through the huge amount of content that exists. However, automated censoring systems create problems of their own which must be addressed:

Over-censoring

Because the decisions are made by AI algorithms, no attempt is made to understand what is actually being said. In the case of hate speech, for example, the algorithm often looks at the syntax and vocabulary used rather than estimating whether the speech is actually designed to incite violence.
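To make the over-censoring risk concrete, here is a minimal sketch of a filter that looks only at vocabulary. It is an illustration only, not how any particular platform works: the `BLOCKED_TERMS` list and the `naive_filter` function are hypothetical names invented for this example.

```python
import re

# Hypothetical blocklist, chosen purely for illustration.
BLOCKED_TERMS = {"attack", "destroy"}

def naive_filter(text: str) -> bool:
    """Return True if the text contains a blocked term, ignoring context."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not BLOCKED_TERMS.isdisjoint(words)

posts = [
    "We should attack this problem from a different angle.",
    "Lovely weather in the park today.",
]

for post in posts:
    # The first post is harmless, but it is flagged because of one word.
    print(naive_filter(post), "|", post)
```

The first post contains no incitement at all, yet it is flagged because the filter matches a word rather than judging intent.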

Furthermore, algorithms can be vulnerable to over-censoring and a lack of precision where the training data is limited or not broad enough, for example where certain speakers are under-represented in the training data.

Lack of Clarity as to what is being censored

Unclear definitions of what should be censored can result in over-censoring. Where we draw the boundaries of what we want to censor as a society should also be subject to public discussion, and should not be determined entirely by social media companies.

Not subject to public scrutiny

Automated censoring systems are inherently opaque. Individuals are rarely, if ever, notified when their content is censored, and content moderation systems apply their filters in non-transparent ways. For example, Facebook, YouTube, Microsoft, and Twitter created a shared hash database to which the participating companies contributed hashes of what they consider to be terrorist propaganda. When users’ content is blocked because it matches this database, this is not disclosed to them.
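A hash-based upload filter of this kind can be sketched roughly as follows. This is a simplified illustration under stated assumptions: it uses exact SHA-256 matching from Python’s standard library as a stand-in, whereas the matching technique used by the real shared database is not described here, and `SHARED_HASHES`, `UploadDecision` and `check_upload` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass

def fingerprint(content: bytes) -> str:
    """Exact-match fingerprint (an assumption; stands in for the real scheme)."""
    return hashlib.sha256(content).hexdigest()

# Hypothetical shared database: only hashes are stored, not the content itself.
SHARED_HASHES = {fingerprint(b"example flagged video bytes")}

@dataclass
class UploadDecision:
    allowed: bool
    reason: str  # what a platform could disclose to the user

def check_upload(content: bytes) -> UploadDecision:
    """Decide before publication whether an upload may go ahead."""
    if fingerprint(content) in SHARED_HASHES:
        return UploadDecision(False, "matched shared hash database")
    return UploadDecision(True, "no match")

print(check_upload(b"example flagged video bytes"))
print(check_upload(b"holiday photo bytes"))
```

The check runs before anything is published, which is what gives it the character of prior restraint. The `reason` field shows that telling the user why an upload was blocked is technically simple; whether it is disclosed is a policy choice.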

It’s clear that AI censoring is a powerful tool which must be used with the utmost caution. The current problems associated with automated censoring systems threaten our freedom of expression through prior restraint. To diminish the negative impact of these technologies on our fundamental rights, users should be notified when content they have uploaded is censored. The Facebook Panel also suggested that individuals should be given the right to a human decision. This would fall in line with the more general movement towards explainable AI and with what the EU has begun to require through the GDPR and, more recently, the EU AI Act. While these steps may not prevent AI filtering systems from imposing prior restraint, they are a step towards ensuring individuals’ rights are protected.

Written by Celene Sandiford, smartR AI

Image source: https://deepai.org/machine-learning-model/text2img