I recently had the privilege of speaking with Lord Christopher Holmes of Richmond (‘Lord Holmes’), Britain’s most successful Paralympic swimmer and the creator of the Artificial Intelligence (Regulation) Bill currently making its way through the Houses of Parliament, to find out what’s new in the world of AI regulation.

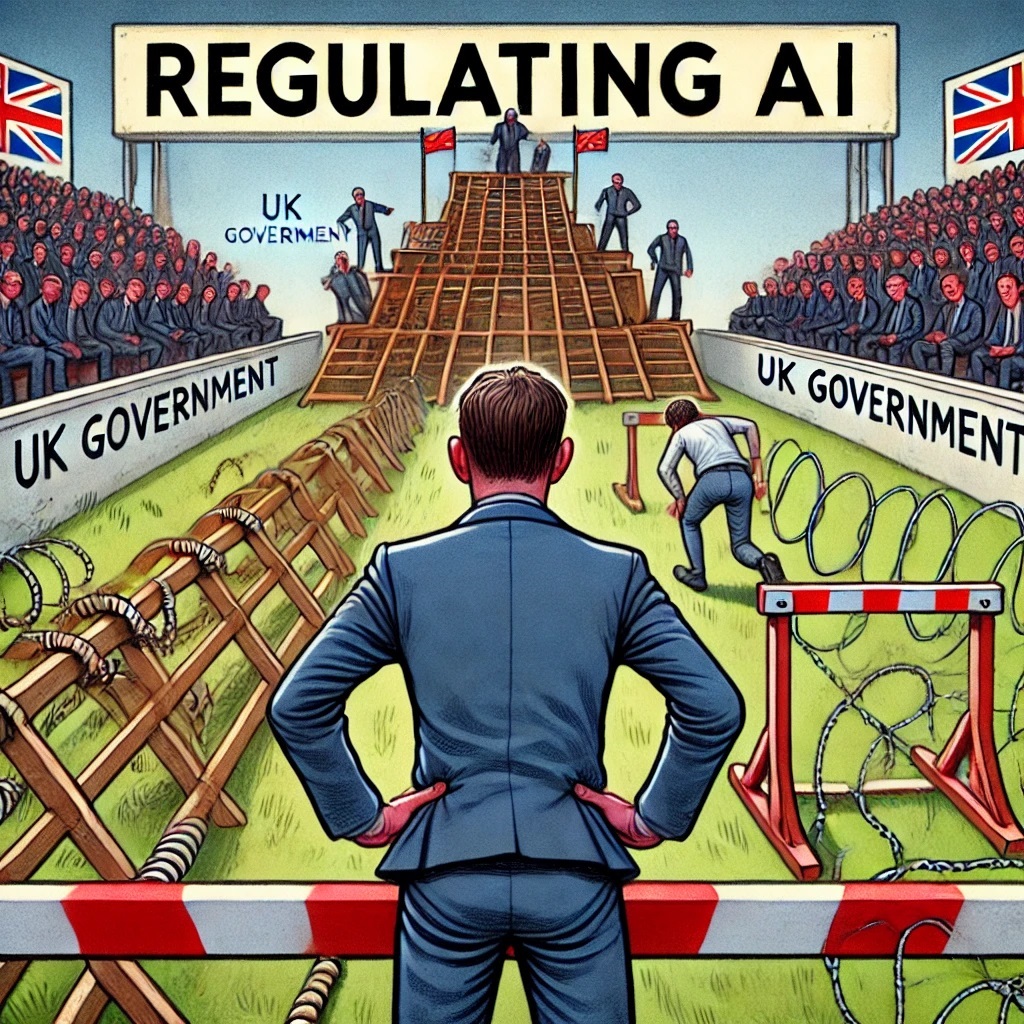

Backpedalling on Pro-Innovation?

I asked Lord Holmes whether, given that his AI Bill had reached its third reading in the House of Lords, he believed the government was backpedalling on its pro-innovation approach. If there is one thing Lord Holmes made clear throughout the interview, it is that being pro-innovation does not mean refusing to regulate.

LH: “There is a fundamental misconception in government, both current and previous, who, wrongly in my view, espouse that a pro-innovation approach necessitates doing very little, if any, regulation. To my mind, all of history demonstrates to us, particularly recent history, that right-size regulation is good for consumer protection, good for innovators and good for inward investment.”

Lord Holmes therefore insists that choosing to regulate would not mean the government had abandoned a pro-innovation approach. In fact, he suggests that the certainty provided by “right sized, clear, consistent, regulation” encourages innovation and is, consequently, pro-innovation.

As to whether the government was backpedalling on its plans to legislate, Lord Holmes explained that while the current government was positive about the Bill in opposition, it has stepped back from that support since taking office. Rather than pushing for legislation, as its initial reaction to the Bill might have suggested, the government, according to Lord Holmes, made no mention of AI legislation in the King’s Speech and has yet to produce any.

Lord Holmes predicts that the government may produce a “very narrowly drawn bill” focused on the largest existential threats and West Coast American big tech. While he acknowledges the need to consider these elements, he cautions:

LH: “That is but one part of the AI landscape. It’s abundantly clear that we need a cross-economy, cross-society, horizontally focused AI bill. Currently, from this government there is no prospect of that.”

Sector-specific or too specific?

All of the government’s white papers, commitments and plans to date have confirmed that it intends to take a sector-specific approach, leaving the regulators in each sector to oversee AI within their own remit. The arguments in favour of this approach are that it allows a quicker response than creating a broad policy; that each sector is best placed to address the issues arising from the particular way AI affects it; and that the effects of AI will be linked with other behavioural changes, which may make it difficult to isolate the specific impact of AI. Lord Holmes’ Bill, by contrast, is cross-sector: it seeks to address the challenges posed by AI through a horizontally focused approach and a small, nimble AI authority.

LH: “I think it is inconsistent and incoherent to have on the one hand, a government that talks about the all-pervasive presence and power of AI… and then seek to take a domain (or sectorial) approach to such a ubiquitous element in our economy, in our society.”

Lord Holmes argues that failing to take this approach will lead to two negative outcomes: a lack of confidence in the consistency of the approach, and gaps where there is simply no regulator. To these I would add the challenges of a regulatory approach that is too product-oriented and fails to grapple with the uncertainties of emerging technologies like AI. For example, if we regulate autonomous vehicles just as we do conventional, human-driven vehicles, we risk failing to address the risks inherent in a technology that learns and adapts over time, such as a vehicle’s potential failure to perform in unpredictable weather or unusual situations.

Another difficulty with a sector-specific approach is the general lack of AI expertise across government. It makes the approach particularly hard to implement, as each sector needs access to its own AI experts, a resource that is, quite frankly, scarce. I challenged Lord Holmes on how his Bill would address this crucial point:

LH: “What is envisaged in my Bill is that the AI authority is that nimble, agile, crucially horizontally focused AI regulator… it should also be the centre of expertise… Learning from each other as an AI community of being available across all of our AI regulators, rather than each unit having to somehow put together their own provision.”

How does the UK’s approach compare?

Given Lord Holmes’ staunch opposition to the sector-based approach, I wondered what he thought of the EU’s AI Act and regulatory approach. While graciously acknowledging the EU’s valiant attempt to become the first significant regulator in this field, and that the Act is certainly a “creature of that legal code”, he raised concerns about the clarity of routes to redress.

LH: “To take a large product safety approach to something such as AI is tricky. I think there are going to be a number of issues around the ability of citizens and businesses in certain contexts, to understand the route to redress and who is the competent authority to redress that to.”

Yet, in comparing the UK’s approach to the EU’s, Lord Holmes expressed concern that the UK is already behind the curve:

LH: “If we don’t take the opportunity to legislate with our tech ecosystem, with our financial services sector, with our fabulous higher education, with our spin outs/start-ups/scaleups, and fundamental good fortune of English common law. If we don’t take that opportunity, we will not optimise the benefits and we will not be fully cognisant of the burdens inherent in all of the AI technologies.”

Health: NHS data or my data?

In discussing the gains and risks of AI, I asked Lord Holmes how he thought these should be balanced in healthcare, an area where we have the most to gain and potentially the most to lose. Lord Holmes was very understanding of why the public may feel “queasy, suspicious and often opposed to sharing their data”, acknowledging that our healthcare data is essentially “who we are as humans”.

For these reasons, he regards Clause 6 of his Bill, which promotes public engagement, as its most important provision. Lord Holmes emphasises that for AI to be successfully integrated into society, we need “cross-cutting and universal public discourse and debate around AI”.

LH: “We know how to do this, we know how to get this right, we created in vitro fertilisation decades ago. What could be more science fiction than bringing human life into being in a human test tube, why is it seen as not only a positive part of our society now, but also a faded into background part of our society? Because a colleague of mine, the late Baroness Warnock, had a commission, went around the country and talked to people, had those discussions, had that debate. We need something on that scale and more, because the potential is immense.”

The way we end up regulating AI will absolutely be shaped by society’s perception of it. If these new technologies are pushed without public engagement and without meeting public expectations, we risk the same kind of backlash that genetically modified (GM) crops faced. We cannot risk that kind of reaction because, whether we like it or not, we need AI. More specifically, the NHS needs AI.

LH: “Be in no doubt, it’s only through AI, blockchain and these other technologies, human led, human consented and human understood, that we are going to get the NHS that we need, the healthcare that we need as an ageing population.”

At this point I told Lord Holmes that, as an ordinary citizen, I feel as though many of these decisions are far beyond our control. The Prismall v Google case challenged the Royal Free London NHS Foundation Trust’s transfer of 1.6 million patients’ NHS data to Google DeepMind to develop an AI application for detecting acute kidney injury. The failure of Prismall’s representative action illustrates not only the difficulty of challenging such decisions, but also the difficulty of maintaining control of our data.

LH: “There is a bit of an odd conversation which goes on where people talk about NHS data, the huge possibility of NHS data, what we could do with NHS data. But what is NHS data? It’s not this perfectly formed, fabulous, clean up to date, shiny data set…It’s more complex than that, it’s fractured, it’s more in pieces than that, and crucially, it’s each and every citizen’s data which is constituent parts of what is called NHS data.”

Are start-ups getting the same opportunities?

Finally, on behalf not just of smartR AI but of all the smaller players in the growing world of AI, I asked Lord Holmes how he would address the unique challenges facing small businesses up against the Silicon Valley tycoons. Lord Holmes expressed concern that the current government was heading “down the track into winner takes all, cornering the market, big oligopolistic market domination”.

LH: “We should be looking far more at what are the situations for startups, scaleups in this country in AI and all of that tech sectors. And doing everything to provide that ecosystem, enabling, empowering all those start up scales, because that’s where the real innovation, that vibrant ecosystem and transformation occurs. It won’t come from huge organisations who not only very rapidly become monopoly players, but through that and the approach that they take, there is so very little of what you can truly call innovation.”

Lord Holmes was keen to point out that his Bill is subject to proportionality principles, so none of its provisions would fall as heavily on small entities as on multinationals. Furthermore, he argued that the government must make real changes to the way government procurement works so that small and medium-sized entities have an equal opportunity of winning these contracts.

LH: “It is absolutely critical that both within the legislative framework, but in the broader approach of government, there is a real commitment to startups, scaleups, small entities and not least because they are the largest part of our economy. Our economy is not made of big businesses, it’s largely made of small entities. They are the innovators, they are the income generators, they are the employers largely in this country.”

A Personal Comment

As someone interested in AI regulation, I have been following the government’s discussions, white papers and announcements over the last couple of years. With the government taking what is essentially a ‘wait and see’ approach, I haven’t had much reason to rejoice at its actions. Lord Holmes’ Bill changes things. While I can’t say it’s a fix-all solution, I do think it is a really positive step in the right direction, covering many crucial considerations such as public engagement, shared AI expertise and a consistent approach.

Written by Celene Sandiford, smartR AI