Internet forums are rife with parents completely frustrated with their child’s apparent lack of common sense.

‘She knows she has to wash her hands after she uses the toilet, but she also washes her hands after using the shower.’

‘He knows he has to wait to cross the road, but if he forgets, he stops and waits in the middle of the road.’

Having recently spent some time overhearing conversations children have with their parents, I’ve noticed that children constantly ask questions about the world around them, questions that can often be answered with common sense. We should note that a lack of common sense isn’t limited to children; certainly some adults are lacking in this department. Consider how often the phrase ‘Hold my beer, I’m going to…’ has preceded disastrous decisions made by adults who should know better.

Yet, on the whole, if children (and most adults) are eventually able to learn common sense, why does Artificial Intelligence struggle to do so?

There is consensus amongst the AI community that AI completely lacks common sense. As Yejin Choi has put it, AI is “shockingly stupid.” One AI medical diagnosis system did not realise it had been given data about a car and diagnosed the car with measles. Alexa challenged a child to plug a charger halfway into an electric socket and touch a penny to the prongs. Most adults could tell you that a car cannot have measles and that a child should not play with electricity, but defining exactly what common sense involves, and where it comes from, is a challenge.

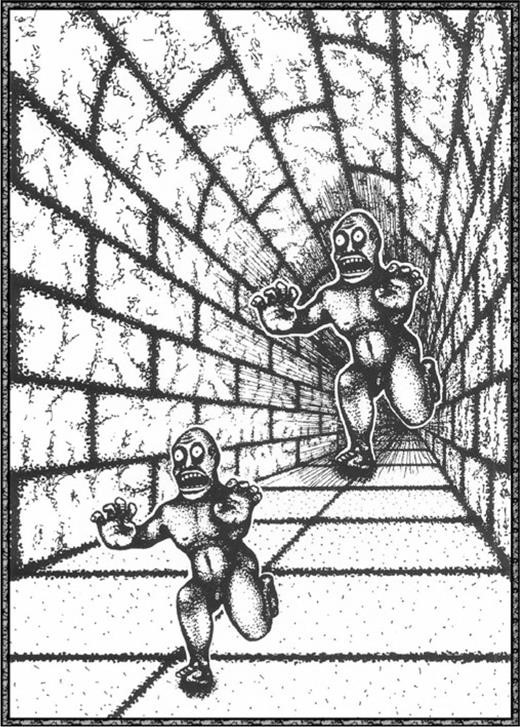

Yejin Choi offers a concrete example of why AI is still far from human-level intuitive reasoning: Roger Shepard’s Terror Subterra (in the image below). An AI would succeed in identifying the content of the visual scene: two monsters in a tunnel. Humans, however, see beyond the pixels and can interpret the entire story behind the scene.

Using our human-level intuitive reasoning, we probably even perceive that the monster doing the chasing is larger than the other, although both monsters are in fact exactly the same size.

We draw these inferences from our deep and complicated view of the world and, remarkably, without even considering less likely inferences, such as that the monsters are running backward or swimming in the ocean. We generate these inferences instantaneously. In contrast, an AI based on machine learning would go through all the possible labels one by one and choose the label with the highest plausibility. This is effective for a narrowly defined task. But when it comes to something as broad as common sense and forming human-level intuitive inferences, Choi suggests that natural language and open-text descriptions work best to communicate between humans and machines.
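For readers who like to see the idea in code, the label-by-label selection described above can be sketched roughly as follows. This is only an illustration: the function names, the candidate labels and the plausibility scores are all made up for the example, and a real system would get its scores from a trained model rather than a hand-written table.

```python
from typing import Callable, Iterable


def most_plausible_label(
    labels: Iterable[str],
    plausibility: Callable[[str], float],
) -> str:
    """Score every candidate label in turn and return the highest-scoring one."""
    best_label = None
    best_score = float("-inf")
    for label in labels:
        score = plausibility(label)  # one score per label, checked one by one
        if score > best_score:
            best_label, best_score = label, score
    if best_label is None:
        raise ValueError("no candidate labels were given")
    return best_label


# Hypothetical scores standing in for a trained model's output.
TOY_SCORES = {
    "two monsters in a tunnel": 0.90,
    "two monsters running backward": 0.04,
    "two monsters swimming in the ocean": 0.01,
}


def toy_plausibility(label: str) -> float:
    """Look up the made-up score for a label (0.0 if the label is unknown)."""
    return TOY_SCORES.get(label, 0.0)


result = most_plausible_label(TOY_SCORES.keys(), toy_plausibility)
print(result)
```

Note that the sketch can only ever return one of the labels it was handed. It identifies the scene, but it has no way of telling the story behind it, which is the gap Choi is pointing to.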

DARPA, the US defence research agency, is also trying to build common sense into AI programmes with a two-fold strategy. The first strand is to create a service that learns from experience, as a child does, constructing computational models that mimic the core domains of child cognition: objects (intuitive physics), agents (intentional actors), and places (spatial navigation). The second involves developing a service that learns from reading the web, an approach that relies on web scraping.

Despite the best intentions, AI is not yet able to grasp the nuances of human common sense. The absence of common sense is thought to be the greatest barrier between the AI applications we see today, which take the form of narrow AI with limited applications, and the general AI applications that are hoped for in the future. For now, we must content ourselves with teaching common sense to our children, rather than our AIs.

Written by Celene Sandiford, smartR AI

Image credit: https://deepai.org/machine-learning-model/text2img